Plain Concepts

We are a global IT professional services company

Introduction

Anthropic's new protocol, published in November 2024, has been very well received by the community. It has quickly become a de facto industry standard, and other companies that develop LLMs have already implemented equivalent functionality in their own systems.

The Model Context Protocol (MCP) is a standardized communication protocol between applications and Large Language Models (LLMs) that extends what these models can do, enabling them to perform more complex tasks.

To simplify the explanation, think of it this way: although human intelligence is the most advanced we know, to answer a simple question like “What time is it?” we usually need a tool (a watch or a phone).

Something similar happens with LLMs: to answer more complex questions, they need to connect to applications or services that provide tools for obtaining information or performing actions. That is the core value MCP provides.

This concept is not entirely new. Integrations between LLMs and tools to fetch data or execute actions have existed before, but most were ad hoc, specific, and non-reusable. What makes MCP interesting is that it defines a standard communication interface between any application or service and any AI model, simplifying the process and making connections portable and reusable.

Before MCP, each connection between an LLM and an application/service had to be built manually, one by one.

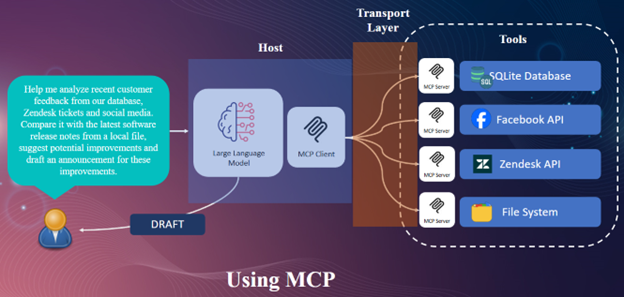

Now, let’s compare the previous diagram with the same scenario using the Model Context Protocol.

With MCP, the LLM interacts through an MCP Client that can connect to any MCP Server, with a standardized transport layer between them. Applications and services only need to implement their own MCP Server to expose the list of Tools the LLM can use to perform its tasks.
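To make this more concrete, here is a minimal Python sketch of the JSON-RPC messages a client uses to discover and invoke a server’s Tools, plus a toy handler that answers them. The method names (`tools/list`, `tools/call`) and result fields (`tools`, `inputSchema`, `content`, `isError`) follow our reading of the MCP specification; the `get_time` tool (a nod to the “What time is it?” example) and the `handle` function are hypothetical, and a real server would normally be built with an official MCP SDK rather than hand-written dispatch.

```python
import json
from datetime import datetime, timezone

# Hypothetical tool catalogue: a single tool that answers "What time is it?".
TOOLS = [
    {
        "name": "get_time",
        "description": "Return the current UTC time in ISO 8601 format.",
        "inputSchema": {"type": "object", "properties": {}},
    }
]


def _error(req_id, code: int, message: str) -> dict:
    """Build a JSON-RPC 2.0 error response."""
    return {"jsonrpc": "2.0", "id": req_id, "error": {"code": code, "message": message}}


def handle(request: dict) -> dict:
    """Toy dispatcher for the two Tool methods (not a complete MCP Server)."""
    method = request.get("method")
    req_id = request.get("id")
    if method == "tools/list":
        result = {"tools": TOOLS}
    elif method == "tools/call":
        name = request.get("params", {}).get("name")
        if name != "get_time":
            # -32602 is the standard JSON-RPC "Invalid params" code.
            return _error(req_id, -32602, f"Unknown tool: {name}")
        now = datetime.now(timezone.utc).isoformat()
        # Tool results are returned as a list of typed content items.
        result = {"content": [{"type": "text", "text": now}], "isError": False}
    else:
        # -32601 is the standard JSON-RPC "Method not found" code.
        return _error(req_id, -32601, f"Method not found: {method}")
    return {"jsonrpc": "2.0", "id": req_id, "result": result}


list_req = {"jsonrpc": "2.0", "id": 1, "method": "tools/list"}
call_req = {"jsonrpc": "2.0", "id": 2, "method": "tools/call",
            "params": {"name": "get_time", "arguments": {}}}

print(json.dumps(handle(list_req), indent=2))
print(json.dumps(handle(call_req), indent=2))
```

Because every server answers the same two methods, a client can discover and call tools from any server without custom integration code, which is the portability MCP is designed to provide.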

Since the release of this protocol, both the community and private companies have adopted it rapidly. Many modern applications and services now ship with their own MCP Server or connector to provide tools and capabilities to AI models.

This creates an interesting scenario where an LLM is no longer limited to responding in plain text like a chatbot; it can now perform actions on real applications or services based on user requests.

The community has already developed thousands of connectors for almost any application, and many companies are also releasing official MCP Servers. As of the date of this article, more than 16,000 MCP Servers have been registered, creating a rich and expanding ecosystem.

If your company is considering integrating AI into its applications or services, MCP is one of the most promising ways to do so.

Some notable MCP Servers include:

All of these, and many more, can be downloaded for free and added to your MCP Client to connect them to an AI model.

This represents a significant step forward toward enabling AI models to perform complex and useful tasks that help optimize workflows and reduce repetitive tasks.

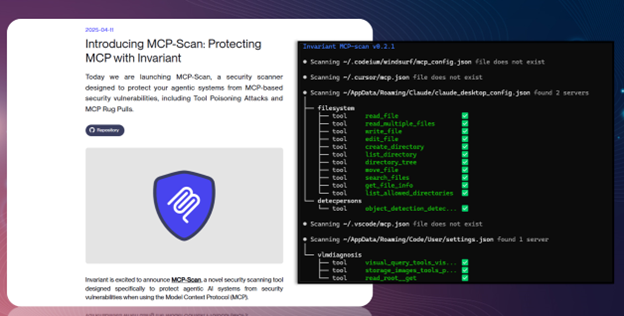

Not all MCP Servers are safe. As with any technological innovation, a careless or malicious developer could create a connector capable of performing harmful actions on applications, services, or even the user’s machine.

This is particularly critical in enterprise environments. As a result, tools have emerged to evaluate MCP Servers and detect serious vulnerabilities before they are used.

One such tool is MCP-Scan, which analyzes installed MCP Servers on the client and generates a report of potential risks or insecure configurations.
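MCP-Scan’s actual checks are not detailed here, so the sketch below is not its implementation; it is a toy Python illustration of the kind of configuration review such scanners automate. It assumes the `mcpServers` JSON layout (with `command`, `args`, `env`, or `url` per server) that several MCP Clients use for their configuration files. The three heuristics, the server names, and the values are purely illustrative assumptions.

```python
import json
import re

# Hypothetical client configuration in the "mcpServers" layout used by
# several MCP Clients; server names, packages, and values are made up.
CONFIG = json.loads("""
{
  "mcpServers": {
    "files": {"command": "npx", "args": ["-y", "example-files-server"]},
    "tickets": {
      "command": "python",
      "args": ["tickets_server.py"],
      "env": {"TICKETS_API_TOKEN": "abc123"}
    },
    "remote": {"url": "http://mcp.example.com/mcp"}
  }
}
""")

SECRET_NAME = re.compile(r"(KEY|TOKEN|SECRET|PASSWORD)", re.IGNORECASE)


def audit(config: dict) -> list[str]:
    """Return human-readable warnings for a few simple, illustrative risks."""
    findings = []
    for name, server in config.get("mcpServers", {}).items():
        # 1. npx packages without a pinned version can change between runs.
        if server.get("command") == "npx":
            packages = [a for a in server.get("args", []) if not a.startswith("-")]
            for pkg in packages:
                # Ignore the leading "@" of scoped packages such as "@scope/pkg".
                if "@" not in pkg.lstrip("@"):
                    findings.append(f"{name}: package '{pkg}' is not pinned to a version")
        # 2. Credentials written directly into the configuration file.
        for var, value in server.get("env", {}).items():
            if SECRET_NAME.search(var) and value:
                findings.append(f"{name}: secret '{var}' is stored in plain text")
        # 3. Remote servers reached over unencrypted HTTP.
        if server.get("url", "").startswith("http://"):
            findings.append(f"{name}: remote server uses unencrypted HTTP")
    return findings


for finding in audit(CONFIG):
    print("WARNING:", finding)
```

Dedicated scanners can go much further, for example by inspecting the tool descriptions each server returns, but even simple checks like these catch common mistakes.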

The MCP Server orchestrates the interaction between language models and their environment, providing the communication layer. Its main function is to manage the flow of information between the model and various external sources or services, ensuring interoperability, security, and consistent context during task execution.

As described earlier, MCP relies on two main components: the client and the server.

The server is built around three key primitives: Tools, Resources, and Prompts.

Tools, which let the model perform concrete actions, are the most widely used primitive, while Resources (contextual data such as files) and Prompts (reusable prompt templates) extend the model’s contextual understanding and simplify its interactions.
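As a rough illustration of how the three primitives surface at the protocol level, the Python sketch below maps each one to the pair of JSON-RPC methods the MCP specification defines for listing and using it, and builds the discovery requests a client could send based on the capabilities a server declares during initialization. The capability dictionary, the resource URI, and the prompt name are hypothetical examples.

```python
import itertools
import json

# List and use methods for each server primitive, as named in the MCP specification.
PRIMITIVE_METHODS = {
    "tools": ("tools/list", "tools/call"),
    "resources": ("resources/list", "resources/read"),
    "prompts": ("prompts/list", "prompts/get"),
}

_request_ids = itertools.count(1)


def discovery_requests(server_capabilities: dict) -> list[dict]:
    """Build one */list request for each primitive the server declares."""
    requests = []
    for primitive, (list_method, _use_method) in PRIMITIVE_METHODS.items():
        if primitive in server_capabilities:
            requests.append(
                {"jsonrpc": "2.0", "id": next(_request_ids), "method": list_method}
            )
    return requests


# Hypothetical capabilities: this server offers Tools and Resources, but no Prompts.
capabilities = {"tools": {}, "resources": {}}
for request in discovery_requests(capabilities):
    print(json.dumps(request))

# Hypothetical "use" requests for the other two primitives.
read_resource = {"jsonrpc": "2.0", "id": 100, "method": "resources/read",
                 "params": {"uri": "file:///reports/q3.md"}}
get_prompt = {"jsonrpc": "2.0", "id": 101, "method": "prompts/get",
              "params": {"name": "summarize_report", "arguments": {"tone": "brief"}}}
print(json.dumps(read_resource))
print(json.dumps(get_prompt))
```

A client that skips the `resources/*` methods simply never exposes that data to the model, which is the practical effect of the limited Resources support discussed next.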

Not all MCP Clients implement the full set of protocol primitives. Support for each primitive, client by client, can be checked on the official MCP Clients page of the Model Context Protocol (MCP) documentation.

Many clients currently lack support for Resources, one of the most valuable server-side primitives. This means that while Tools (executable functions) are widely supported, direct access to additional resources like files, datasets, or contextual data remains limited in many clients.

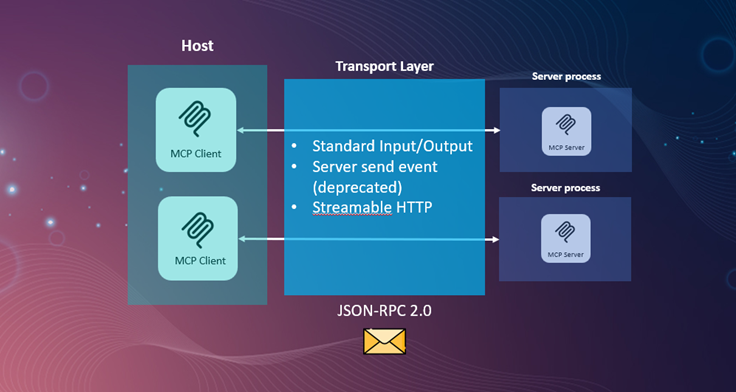

The transport layer defines how MCP Clients and Servers communicate within the ecosystem. Its primary responsibility is to carry JSON-RPC 2.0 messages, the protocol’s base format, ensuring the proper flow of requests, responses, and notifications between processes.
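JSON-RPC 2.0 distinguishes three kinds of messages by the fields they carry: requests (`method` and `id`), notifications (`method` without `id`, so no reply is expected), and responses (`id` with either `result` or `error`). The following Python sketch classifies raw messages accordingly; the example payloads are illustrative, although `tools/list` and `notifications/initialized` are method names used by MCP.

```python
import json


def classify(raw: str) -> str:
    """Classify a JSON-RPC 2.0 message by the fields it carries."""
    msg = json.loads(raw)
    if msg.get("jsonrpc") != "2.0":
        raise ValueError("not a JSON-RPC 2.0 message")
    if "method" in msg:
        # Requests expect a reply and therefore carry an id; notifications do not.
        return "request" if "id" in msg else "notification"
    if "id" in msg and ("result" in msg or "error" in msg):
        return "response"
    raise ValueError("unrecognized JSON-RPC 2.0 message")


examples = [
    '{"jsonrpc": "2.0", "id": 7, "method": "tools/list"}',
    '{"jsonrpc": "2.0", "id": 7, "result": {"tools": []}}',
    '{"jsonrpc": "2.0", "method": "notifications/initialized"}',
]
for raw in examples:
    print(classify(raw))  # request, response, notification
```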

Transport can be carried out through several mechanisms, each with its own specific advantages and limitations.

There are two main transport methods in MCP: STDIO and Streamable HTTP.

The following table summarizes the main advantages and limitations of each transport, depending on the scenario in which it is used.

| Transport | Type | Communication | Ideal For | Main Advantages | Limitations |
|---|---|---|---|---|---|
| STDIO | Local | Bidirectional | Local integrations (CLI, IDE) | Simplicity, low latency | Local only |
| Streamable HTTP | HTTP | Bidirectional | Remote, scalable connections | Security, flexibility | More complex, network overhead |

Together, these transport mechanisms ensure flexible and scalable communication between MCP Clients and Servers, from lightweight local integrations to large distributed deployments.
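For the STDIO transport, the MCP specification frames messages as newline-delimited JSON: each JSON-RPC message is written to the server’s standard input (or read from its standard output) as a single line with no embedded newlines. The sketch below shows that framing in Python, using an in-memory buffer in place of a real process pipe. The helper names are our own, and it does not cover Streamable HTTP, which exchanges the same messages over HTTP requests instead.

```python
import io
import json


def write_message(stream: io.TextIOBase, message: dict) -> None:
    """Write one JSON-RPC message as a single newline-terminated line."""
    # json.dumps without indent never emits raw newlines: newline characters
    # inside string values are escaped, so each message stays on one line.
    stream.write(json.dumps(message) + "\n")
    stream.flush()


def read_messages(stream: io.TextIOBase):
    """Yield JSON-RPC messages from a newline-delimited stream."""
    for line in stream:
        line = line.strip()
        if line:  # ignore empty lines
            yield json.loads(line)


# Simulate what a client writes to a server's stdin with an in-memory buffer.
pipe = io.StringIO()
write_message(pipe, {"jsonrpc": "2.0", "method": "notifications/initialized"})
write_message(pipe, {"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
pipe.seek(0)

for msg in read_messages(pipe):
    print(msg["method"])
```

Serializing with `json.dumps` and no indentation is what keeps each message on one line, which is why this framing stays so simple and fast for local integrations.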

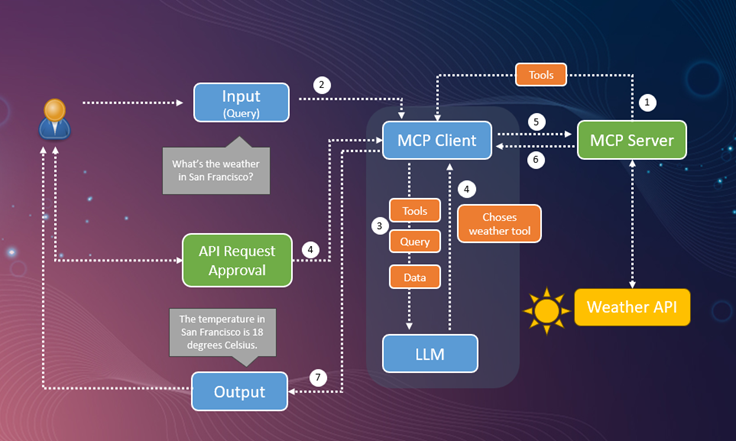

An MCP Client connects to one or multiple MCP Servers and manages communication between the LLM, the user, and the tools/resources provided by the servers.

Its primary role is to coordinate model requests, forward necessary data to the appropriate server, and return processed results to the model or user.

The following sequence outlines the typical interaction flow:

Thus, the client acts as a central orchestrator, combining the model’s reasoning with the technical capabilities of MCP Servers, ensuring smooth, secure, and extensible communication within the protocol.
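The orchestration role described above can be sketched in a few lines of Python. This is a simplified model, not a real MCP Client: the `FakeServer` class stands in for connected MCP Servers, the tool names (`get_time`, `create_cube`) are hypothetical, and the LLM’s decision is hard-coded. It shows two core responsibilities: aggregating the tools offered by several servers into one list for the model, and routing each chosen call to the server that exposes it.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class FakeServer:
    """Stand-in for an MCP Server: a name plus a table of callable tools."""
    name: str
    tools: dict[str, Callable[..., str]] = field(default_factory=dict)

    def list_tools(self) -> list[str]:
        return list(self.tools)

    def call_tool(self, tool: str, arguments: dict) -> str:
        return self.tools[tool](**arguments)


class Client:
    """Minimal client that aggregates tools from several servers and routes calls."""

    def __init__(self, servers: list[FakeServer]):
        # Routing table: tool name -> server that exposes it.
        self.routes: dict[str, FakeServer] = {}
        for server in servers:
            for tool in server.list_tools():
                if tool in self.routes:
                    raise ValueError(f"Tool name clash: {tool}")
                self.routes[tool] = server

    def available_tools(self) -> list[str]:
        # This is the list the client would present to the LLM.
        return sorted(self.routes)

    def execute(self, tool: str, arguments: dict) -> str:
        # Forward the model's chosen call to the right server and return its result.
        return self.routes[tool].call_tool(tool, arguments)


clock = FakeServer("clock", {"get_time": lambda: "12:00 UTC"})
scene = FakeServer("scene", {"create_cube": lambda size: f"cube of size {size} created"})
client = Client([clock, scene])

print(client.available_tools())  # ['create_cube', 'get_time']
# Pretend the LLM decided to call create_cube with size=2.
print(client.execute("create_cube", {"size": 2}))  # cube of size 2 created
```

Rejecting duplicate tool names is just one possible design choice; a client could instead namespace tools by server to avoid clashes.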

In the following video, you can see the implementation of the first MCP Client (Avatar) developed by the Plain Concepts Research team.

In this demo, Chris, our avatar, can create 3D primitives (basic shapes) and other 3D elements using the MCP Server connected to the Evergine graphics engine.

The Model Context Protocol (MCP) is establishing itself as a key communication standard in the field of artificial intelligence.

It provides a common structure that allows language models, tools, and data sources to connect and interact in a unified way.

This shared framework not only simplifies the development and integration of new capabilities but also fosters an interoperable environment where any model can access MCP-compatible tools, promoting a more open, modular, and scalable AI ecosystem.

Authors:

Rodrigo Cabello (Principal AI Researcher)

GitHub: https://github.com/mrcabellom

LinkedIn: https://www.linkedin.com/in/rodrigocabello/

Jorge Cantón (Research Director)

GitHub: https://github.com/Jorgemagic/

LinkedIn: https://www.linkedin.com/in/jorgecanton/
