Elena Canorea

Communications Lead

As one of the two major events that Microsoft holds every year, Microsoft Build is the developer conference at which the company announces the latest updates to its tools and services. Although it is usually an in-person event, the worldwide COVID-19 crisis led Microsoft to hold it this year as 48 hours of online sessions, open to anyone who registered.

Some questions arose immediately. Because speakers could not travel, Microsoft needed to record them remotely, but how could it ensure the quality of the image and audio? Where would the speakers be recorded: at home? What would the speakers' surroundings look like on screen, and what impression would they give attendees?

These and many other related questions are usually solved by recording in a professional studio or on a stage such as a television set, with a professional camera assistant and lighting, which was not possible during the pandemic. Fortunately, we found a solution we call Virtual Stage.

Virtual Stage is an application that leverages the power of Azure Kinect and the latest breakthroughs in AI to record speakers at home as if they were in a professional recording studio, standing in front of a green screen. The recordings are then sent to post-production, where virtual stages, animations, and compositions can easily be added: imagination rules!

Huge thanks to the team, to the amazing devs at @plainconcepts, and the researchers at @UW for their work that helped us keep speakers safe at home at #MSBuild. Try it out for your own presentations! https://t.co/s6AEMYIWVq

This technology, developed in collaboration with Microsoft Corp., consists of two separate parts:

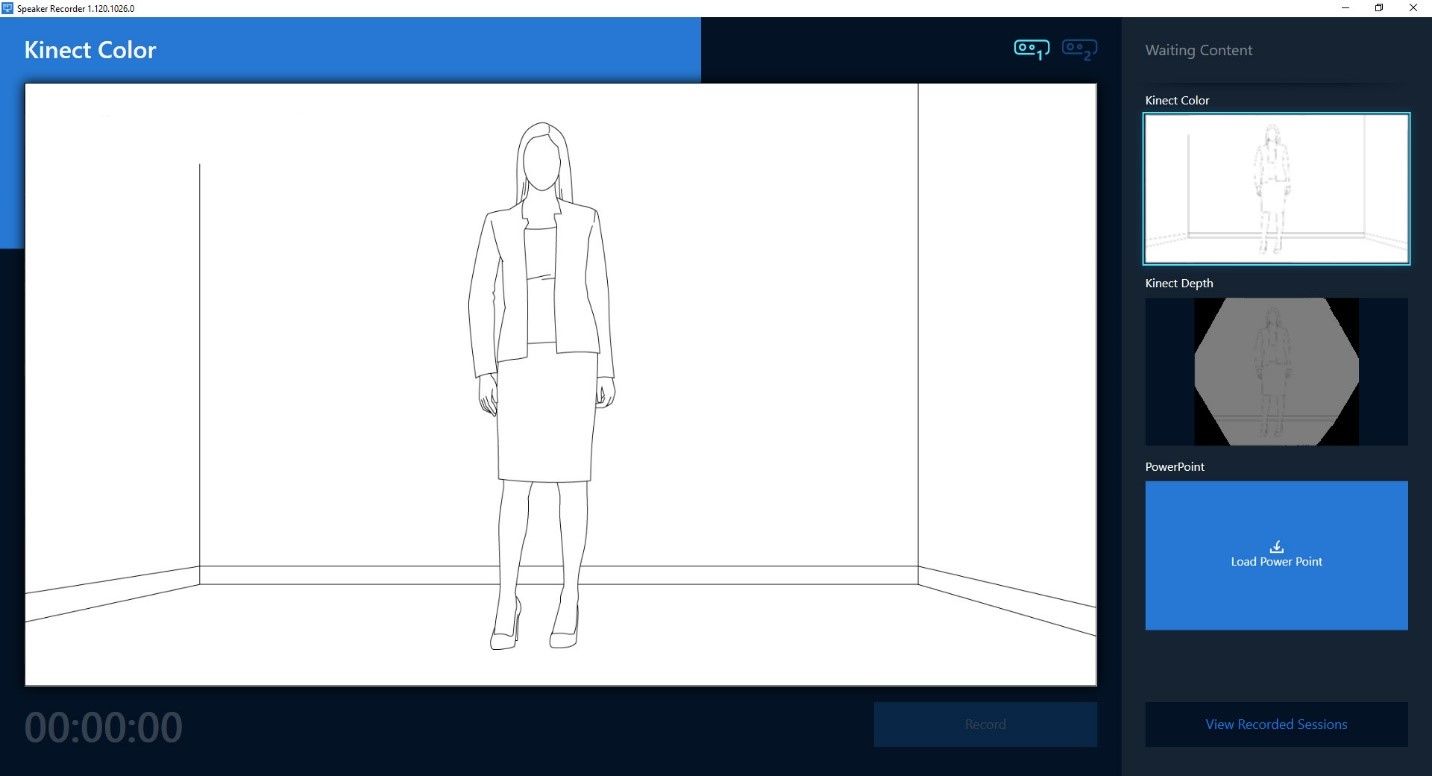

The Speaker Recorder app, which records a lecture using one or two Azure Kinect devices, and Background Matting, the backend that removes the background with high quality using a sophisticated AI model and the information from the Azure Kinect sensors.

The Speaker Recorder application captures the color and depth information from one or two Azure Kinect cameras (two cameras let you record two angles of the same lecture). The speaker can also use a presenter remote to move through PowerPoint slides and a wireless microphone; both are recorded along with the video. When the recording is finished, the videos are uploaded to Azure, ready to be processed.

In Azure, the Background Matting service first uses the depth information to generate a coarse segmentation of the speaker. The backend then separates the speaker from the background with high precision, generating a video with a transparent background.
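To give an intuition for what a coarse, depth-based segmentation looks like, here is a minimal sketch in plain Python. It is not the service's implementation: the function name `coarse_mask_from_depth` and the `near_mm`/`far_mm` thresholds are hypothetical, and a simple distance threshold stands in for whatever the backend actually does. The only fact it relies on is that Azure Kinect depth images store distances in millimetres, with 0 marking pixels that have no valid reading.

```python
from typing import List

DepthMap = List[List[int]]  # depth per pixel in millimetres; 0 means "no reading"


def coarse_mask_from_depth(depth: DepthMap,
                           near_mm: int = 500,
                           far_mm: int = 2500) -> List[List[int]]:
    """Return 1 where the depth lies inside [near_mm, far_mm], else 0.

    Hypothetical helper: the thresholds are illustrative assumptions,
    not values taken from the Virtual Stage code.
    """
    return [[1 if near_mm <= d <= far_mm else 0 for d in row] for row in depth]


# Tiny 3x4 example: the speaker stands about 1.5 m away, the wall about 4 m away.
# One speaker pixel has no depth reading (0), so it is wrongly dropped from the mask.
depth = [
    [4000, 1500, 1520, 4000],
    [4000, 1480,    0, 4000],
    [4000, 1510, 1490, 4000],
]
mask = coarse_mask_from_depth(depth)
for row in mask:
    print(row)
```

The missing pixel in the middle row shows why such a mask is only a starting point: holes and ragged edges are expected, and it is the matting model's job to turn the rough mask into a precise transparency channel.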

Virtual Stage's Background Matting is based on a brand-new technique from the University of Washington. Because labeled training data portraying standing humans is scarce, the original model was trained on 512×512 square images and videos that show people only down to the hip or knee, which results in poor quality when matting full HD videos of standing people.

To get a high-quality foreground in areas like hair, hands, and feet, we made two major contributions to the original method. First, we replaced the original segmentation step with the AI models of the Azure Kinect Body Tracking SDK, which yields a segmentation that is more tolerant of color similarities and ambiguous areas of the image.

Second, we split the body into two square images with a small overlap and process them separately. This lets the model “see” better in difficult areas like the shadow between the feet, without losing precision in the hair or hands.
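The splitting step can be illustrated with a little crop arithmetic. The sketch below is an assumption about how two stacked, overlapping squares could be computed from the speaker's vertical extent; the function name `split_into_two_squares`, its parameters, and the example numbers are all hypothetical and are not taken from the Virtual Stage code. Clamping to the frame borders and resizing each crop to the model's input size are left out.

```python
from typing import List, Tuple

Crop = Tuple[int, int, int]  # (left, top, side) of a square crop, in pixels


def split_into_two_squares(top: int, bottom: int,
                           center_x: int, overlap: int) -> List[Crop]:
    """Cover rows [top, bottom) with two stacked squares sharing >= `overlap` rows.

    Hypothetical helper: it assumes the speaker is narrow enough to fit
    inside one square width and does not clamp crops to the frame.
    """
    height = bottom - top
    if height <= 0 or overlap < 0 or overlap >= height:
        raise ValueError("need bottom > top and 0 <= overlap < height")
    side = (height + overlap + 1) // 2   # ceil((height + overlap) / 2)
    left = center_x - side // 2          # centre both squares on the speaker
    upper = (left, top, side)            # head, torso, hands
    lower = (left, bottom - side, side)  # legs, feet, floor shadow
    return [upper, lower]


# Speaker spans rows 100..1000 of a full HD frame, centred at x = 960.
crops = split_into_two_squares(top=100, bottom=1000, center_x=960, overlap=60)
print(crops)  # [(720, 100, 480), (720, 520, 480)]
```

In this example the upper square ends at row 580 and the lower one starts at row 520, so the two share a 60-row band. When the two matted halves are stitched back together, that shared band leaves room to blend them without a visible seam.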

To download the code, test it, or get more technical details, please check out GitHub.

If you want to know more about our technical marketing services visit this page. Or if you want more information about the technology we developed in collaboration with Microsoft Corp, contact us here!
